What Australia can learn from the UK about keeping kids off social media

The Australian government is planning to introduce minimum age limits for children to use social media websites, amid concerns over the mental health of young people.

An age verification trial would take place before any legislation can be advanced, Prime Minister Anthony Albanese said, but there are few details on what this will look like.

It comes as experts around the world have warned that social media may be harming children’s mental and physical wellbeing. UK ministers have considered a ban on the sale of smartphones to children under 16, and US surgeon general Vivek Murthy has warned that social media can increase the risk of anxiety and depression in children.

Albanese told the Australian Broadcasting Corp: "I want to see kids off their devices and onto the footy fields and the swimming pools and the tennis courts.

"We want them to have real experiences with real people because we know that social media is causing social harm."

He suggested that the age limit would likely be between 14 and 16. Most social media websites currently restrict use to people over 13, but few enforce age verification measures such as ID checks. This means many children can, and do, set up social media profiles with false information to get around the restrictions.

Australia would become one of the first countries to impose an age limit on social media when the law comes into force. According to statistics from data analytics firm Meltwater, there are more than 25 million internet users in Australia, equivalent to nearly 95% of the population.

A 2020 survey found that 76% of Australians aged 15 to 17 had their own social media account, as did 51% of those aged 12 to 14. A more recent study by the University of Sydney found that three-quarters of Australians aged 12 to 17 have used YouTube or Instagram.

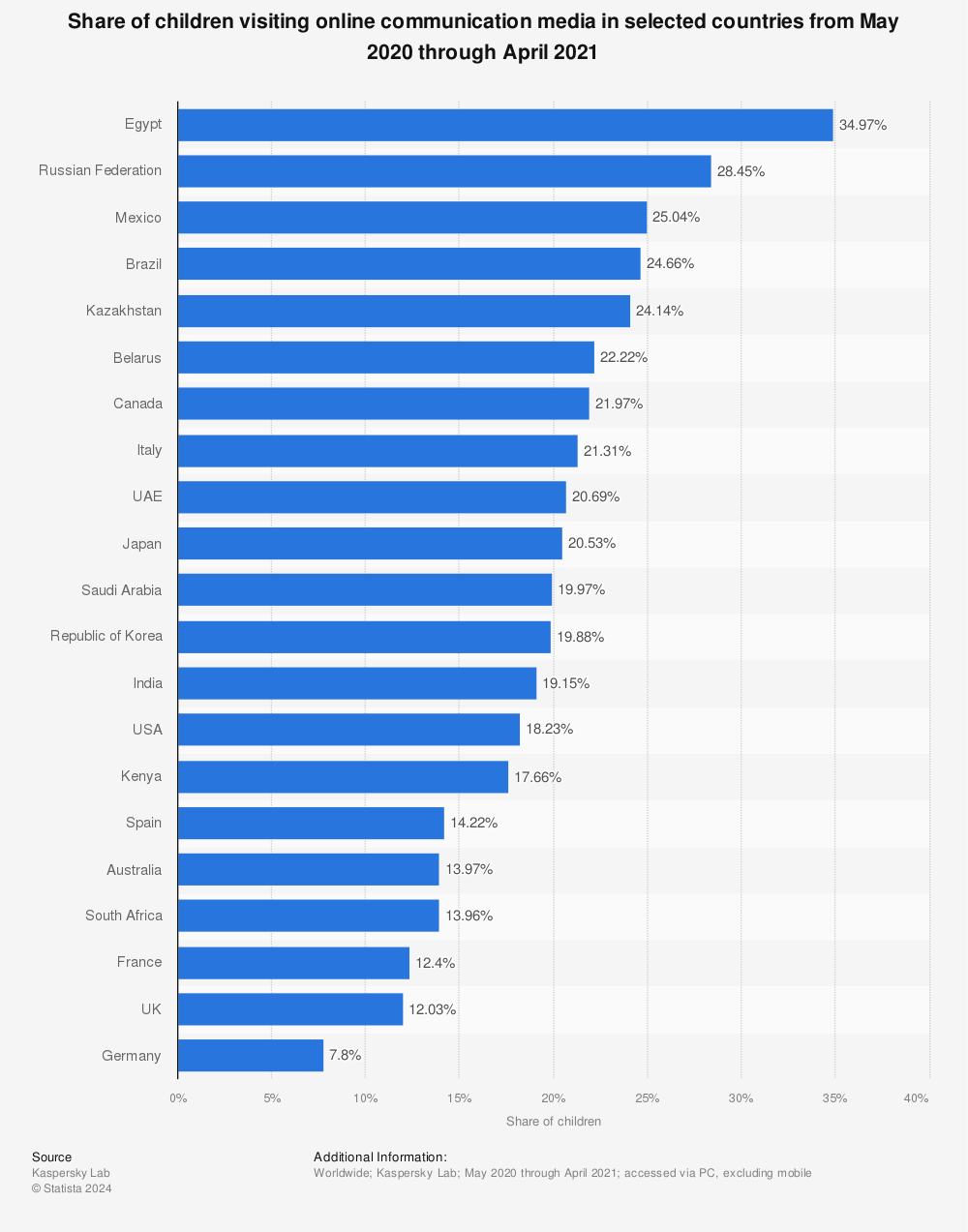

Global statistics from Statista show Australia is far from having the largest share of children accessing online content. That title belongs to Egypt, where nearly 35% of children used digital communications content online. But Australia has a bigger share than Germany, the UK, France and South Africa.

In 2021, Germany had just 7.8% of children accessing online communications media, while the UK had 12.03%. They were followed by France (12.4%), South Africa (13.96%), and then Australia (13.97%).

What are the social media laws in the UK?

The UK brought the Online Safety Act into law last October with the goal of improving online safety for everyone in the country. The law places a duty on internet platforms to have systems and processes in place to manage harmful content on their sites.

According to the Department for Science, Innovation and Technology, the Act will "make the UK the safest place in the world to be a child online". The department added: "Platforms will be required to prevent children from accessing harmful and age-inappropriate content and provide parents and children with clear and accessible ways to report problems online when they do arise."

Ofcom set out guidance on age verification to stop children from accessing online pornography. The methods it lists include sharing bank information to confirm a user is over 18, photo identification matching, facial age estimation, mobile network operator age checks, credit card checks, and digital identity wallets.

The communications regulator considered self-declaration of age, online payments that don’t require a person to be 18, and general terms, disclaimers and warnings to be weak age checks that don’t meet its standards.

Ofcom also published guidance on the new duties that internet services, like social media networks, and search engines need to fulfil when it comes to content that is harmful to children, including how to assess if the service is likely to be accessed by children, the causes and impacts of harms to children, and how services should assess and mitigate the risks of harms to children.

The Online Safety Act also requires social media companies to enforce age limits consistently and protect child users. They must ensure child users have age-appropriate experiences and are shielded from harmful content.

Websites with age restrictions need to specify what measures they use to prevent underage access in their terms of service. This means they can no longer just state that their service is for users above a certain age but do nothing to prevent younger people from accessing it.

What are the social media laws in Germany?

Germany passed a law on the reform of youth protection in the media, called the Youth Protection Act, in 2021. According to international law firm Osborne Clarke, the law requires age verification for online content "when there is a real potential for danger or impairment".

It also makes it mandatory for platform providers to implement precautionary measures to mitigate the risks. "These include child-friendly terms and conditions [and] safe default settings for the use of services that limit the risks of use depending on age," writes Jutta Croll, chairwoman of the board of the German Digital Opportunities Foundation, in an article for the London School of Economics (LSE).

In order to comply with the law, social media providers, video-sharing services and communication services that store users’ communication content "must implement effective structural precautionary measures to shield minors from inappropriate content".

Users must also be able to report and submit complaints about inappropriate content or content deemed harmful for minors.

More broadly across the European Union (EU), the Digital Services Act (DSA) came into force last year, giving the bloc more powers to police content on social media. The DSA forces any digital operation serving the EU to be legally accountable for all content.

Platforms are banned from targeting children with advertising based on their personal data or cookies, and must redesign their content "recommender systems", or algorithms, to reduce harm to children.

The DSA also obliges all big online platforms, such as Instagram, TikTok, YouTube, as well as large search engines like Google and Bing, to "identify and assess the potential online risks for young children and young people" and to put measures in place to mitigate these risks.

These measures should include parental controls, age verification systems, and tools to help young people get support or report abuse.
