Why Google adding AI to its searches is a really big deal

What’s happening

Over the past couple of decades, Google’s search engine has been arguably the single most important force in dictating what the modern internet looks like, to the point where the company’s name has become the literal dictionary definition of finding something on the web.

The Big Tech giant recently began rolling out a dramatic change to how its searches work, a move that could have a massive impact not just on Google itself but on the entire online ecosystem.

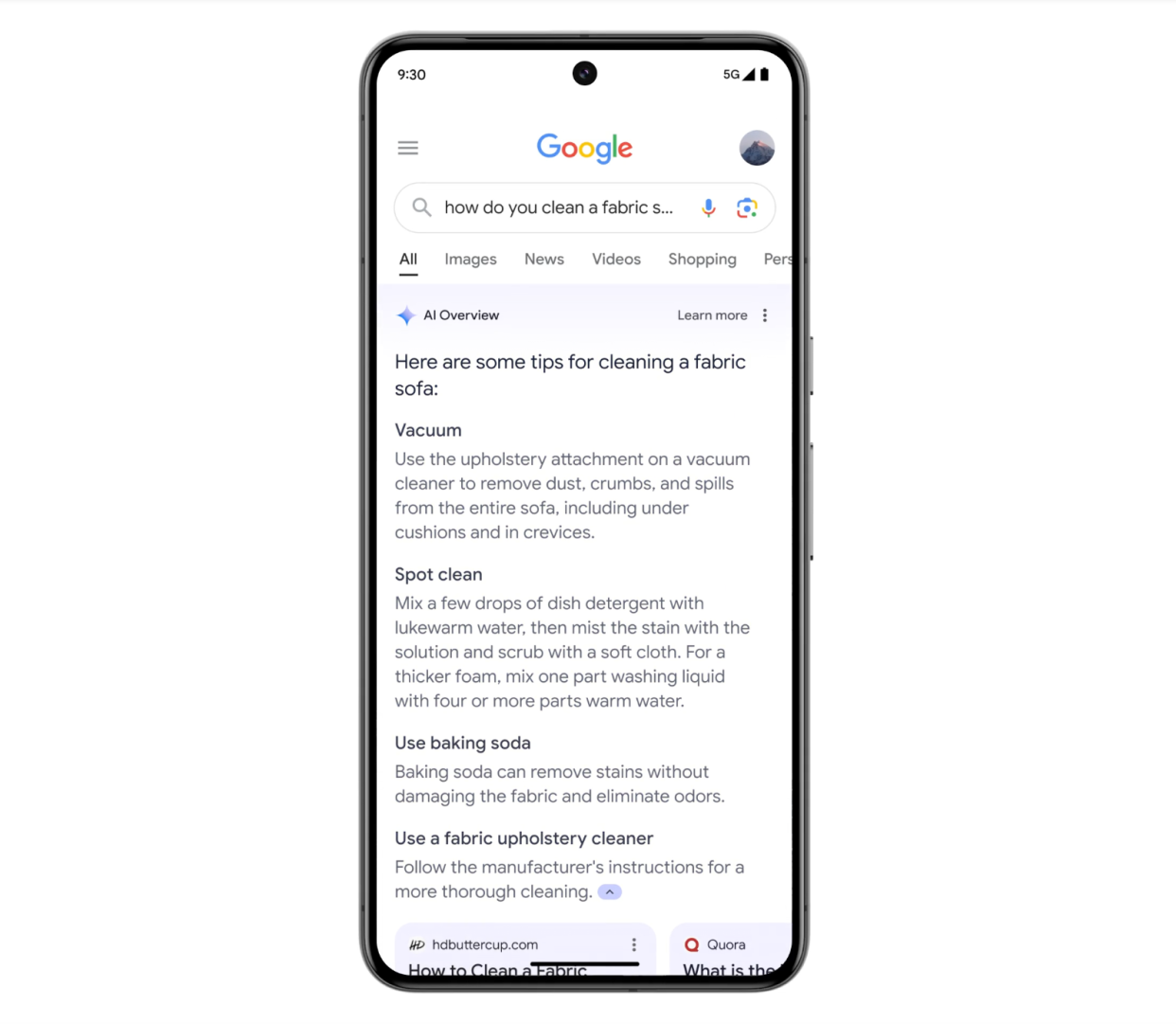

Starting earlier this month, responses generated by artificial intelligence began showing up above the usual list of blue links on certain search queries. These answers, called AI Overviews, use Google’s AI model Gemini to compile information from across the web to provide a succinct answer with zero scrolling or extra clicks.

Like other AI models, Gemini has access to a massive cache of online information that it taps to generate its responses, an approach the company sums up as “Let Google do the searching for you.” So when you enter something like “exercise for knee pain,” Gemini pulls from the enormous amount of data it has on the topic to provide a list of stretches and strength-training moves while sparing you the effort of clicking through to any individual websites.

There’s nothing unique about Google adding AI to search. Just about every major tech company, Yahoo included, has been integrating AI into more and more online experiences in recent months. But changes to Google search just matter more because of how critical it is to how we use the internet. More than 90% of global search traffic — 8.5 billion searches per day — happens on Google. A huge share of the internet economy is also built around drawing eyeballs and clicks through Google search.

Why there’s debate

Since its U.S. launch, most of the conversation about AI Overviews has been about the bad results it has been producing. Among other things, Google search has suggested adding glue to pizza sauce, recommended eating rocks for digestive health and claimed that astronauts have encountered cats on the moon. This phenomenon, known as hallucination, is something all AI models struggle with. But many tech experts worry about the implications of the world’s most important knowledge engine suddenly being populated with unreliable, or even potentially dangerous, falsehoods.

AI optimists say Overviews, while imperfect, still represent a future where people can access the information they need more effectively and efficiently than ever before. They argue that the feature’s problems with bad information are short-term and that it will become increasingly accurate over time as Google locates and remedies its shortcomings, which the company is reportedly working aggressively to do right now.

Some of the biggest concerns about Overviews, though, have nothing to do with its accuracy. Some experts worry that, with AI summaries at the top of their searches, users will stop clicking on the links that also surface, which would mean fewer eyeballs — and ultimately, less revenue — for any site that relies on search traffic to support its business. They fear that Overviews could eventually drive online publishers — everything from news services to entertainment blogs to recipe blogs — out of business, leaving behind no one to create the information that the AI needs to produce its answers.

What’s next

Overviews is currently available in the U.S., but Google says it expects to open it up to at least 1 billion of its global users by the end of the year.

The company has also previewed new AI-powered features it says it plans to add to search in the near future, including schedule planning, the ability to answer highly specific questions and the power to search with a video rather than words.

Perspectives

The economy of the internet is at risk

“If the A.I. answer engine does its job well enough, users won’t need to click on any links at all. Whatever they’re looking for will be sitting right there, on top of their search results. And the grand bargain on which Google’s relationship with the open web rests — you give us articles, we give you traffic — could fall apart.” — Kevin Roose, New York Times

The average person will probably learn to love AI Overviews

“I suspect billions of people will be happy to receive their answers to complicated queries directly on the search results page, uninterested in where the information comes from, so long as it’s accurate enough.” — Casey Newton, Platformer

Google doesn’t care about informing its users anymore

“Providing a sturdy, almost necessary web-search service is no longer the priority, not that it has been for years. Google instead wants to blitz you with buggy new gizmos whose basic functionalities lack everything that made Google an empire, a verb, a dependable custodian of the information superhighway.” — Nitish Pahwa, Slate

AI is helping restore Google search to its original purpose

“Generative AI … is in some ways providing a return to what Google Search was before the company infused it with product marketing and snippets and sidebars and Wikipedia extracts — all of which have arguably contributed to the degradation of the product. The AI-powered searches that Google executives described didn’t seem like going to an oracle so much as a more pleasant version of Google: pulling together the relevant tabs, pointing you to the most useful links, and perhaps even encouraging you to click on them.” — Matteo Wong, The Atlantic

AI may eventually kill off all of the content it needs to survive

“By making it even less inviting for humans to contribute to the web's collective pool of knowledge, Google's summary answers could also leave its own and everyone else's AI tools with less accurate, less timely, and less interesting information.” — Scott Rosenberg, Axios

Google is rolling out AI to a lot of people who aren’t ready for it

“This feature will likely expose billions of people, who have never used a chatbot before, to AI-generated text. Even though AI Overviews are designed to save you time, they might lead to less trustworthy results.” — Reece Rogers, Wired

People can’t know whether to trust information if it’s pulled from its original context

“You’re not engaging with the original source or where that came from. You’re not seeing comments, you’re not even seeing who the author is. And I think those things are really critical for digital media literacy.” — Stuart Geiger, UC San Diego data science professor, to Marketplace

No matter how much Big Tech pushes AI, human-made content will ultimately win out

“Users will quickly learn to recognize AI-generated content, and will increasingly find it less interesting and appealing compared to human-generated content. Just like a great novelist, journalists have a voice and style that people find interesting, and this will become even more obvious as AI content sets a less interesting baseline.” — Rob Meadows, chief technology officer at OpenWeb, to CNET